I like Ezra Klein. I read his book on polarization, *Why We're Polarized*; I listen to his podcast; and I read as many of his New York Times articles as I can. Maybe it is his Brazilian ancestry, or maybe it is the logical way he argues his positions. I am glad that he has joined the recent debate about AI with this opinion piece: “The Surprising Thing A.I. Engineers Will Tell You if You Let Them.”

In this op-ed, Mr. Klein compares the emerging US and European approaches to AI regulation and posits that the EU's attempt to regulate use cases in the era of generative AI is a fool's errand (my choice of words). He then dissects the American proposal and introduces China's approach. The latter illustrates the risks one faces when an autocratic regime is invited to be the regulator.

While I disagree with the idea of pausing AI development, if only for practical reasons, I applaud the Future of Life Institute's initiative to put the question on the table. My take is that we should accelerate the adoption of AI ethics principles and, in parallel, quickly enact regulation that shapes the incentives, and therefore the behavior, of the industry's key players. Mr. Klein’s five categories are a good starting point: interpretability, security, evaluation and audits, liability, and humanness.

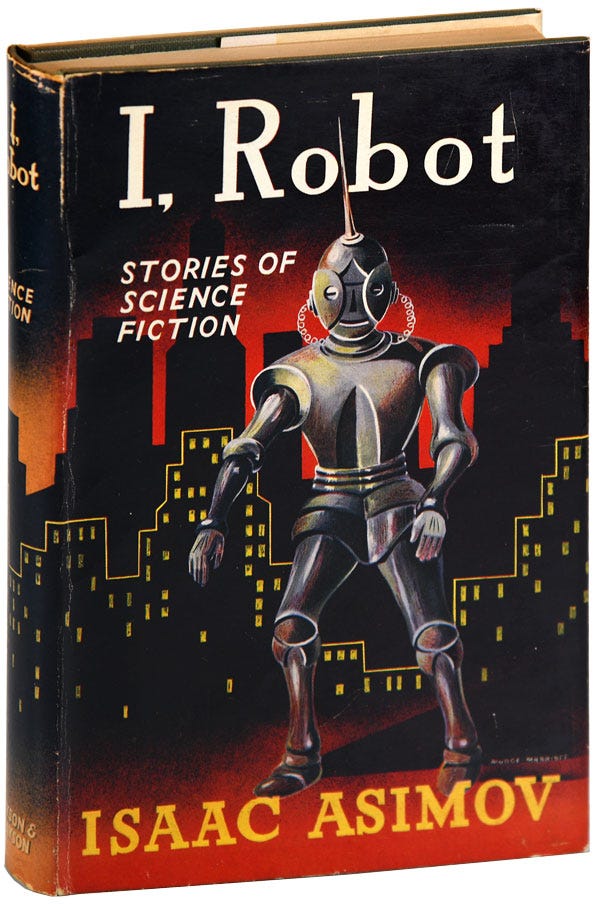

This is not a new field, and some of the questions we are facing are technology-agnostic: they are societal and ethical decisions that we are finally being forced to confront. Let’s go back to the sci-fi classics for inspiration. In 1942 (yes, the date is not a typo), Isaac Asimov introduced the Three Laws of Robotics in his short story “Runaround.” He devised them as a vehicle for his storytelling, but they lay out a sound ethical framework for today’s debate:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

3. A robot must protect its own existence as long as such protection doesn’t conflict with the First or Second Law.

Watch the attached short 1965 clip, in which Mr. Asimov comments on the laws. In it, he is also remarkably prescient, laying out the ethical dilemma of the cyborg that humans and our creations will confront as the line between the two blurs. Sound familiar?

We are writing the next chapter in this sci-fi journey. This time, the future is no longer nearly a century away; it is around the corner.